Introduction

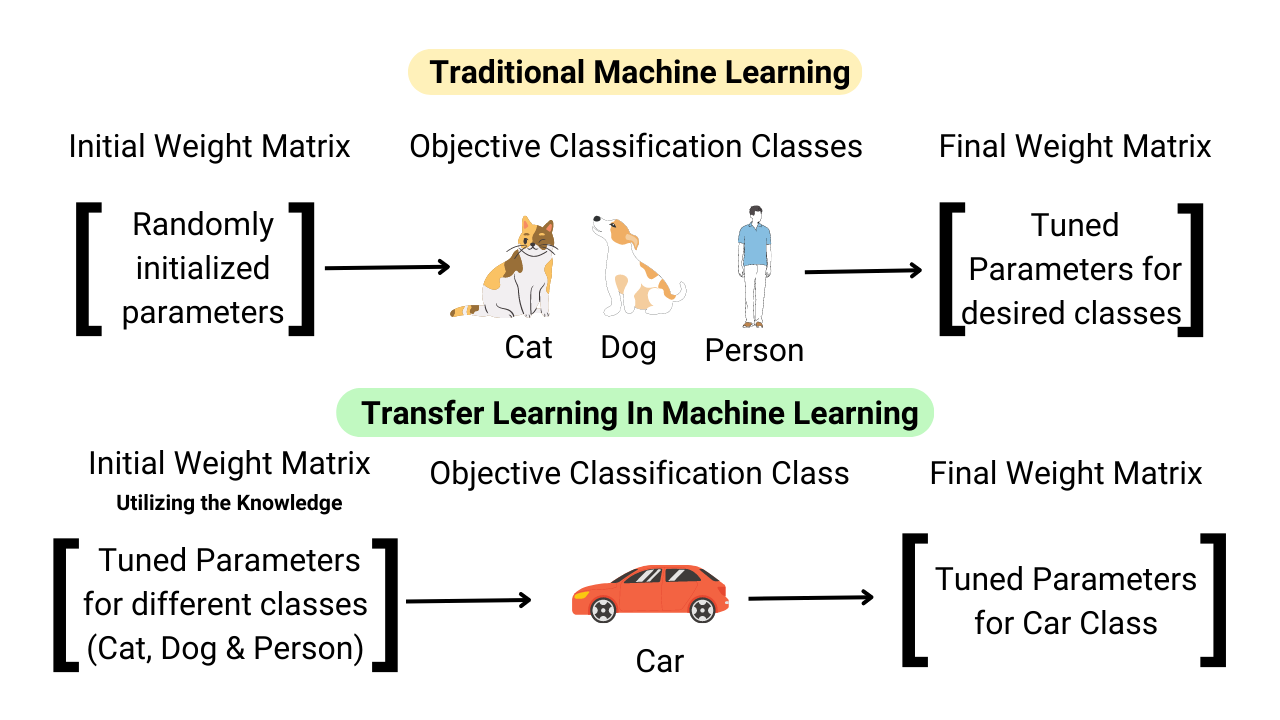

Transfer learning (fine-tuning) is a powerful machine learning technique where a model developed for one task is reused as the starting point for a model on a second task.

It leverages the knowledge gained while solving one problem and applies it to a different but related problem. This method is particularly useful when the amount of data available for the new task is limited.

The main difference between fine-tuning and transfer learning is that, in the case of fine-tuning, the task is similar, while in the case of transfer learning, the task is different. However, in my opinion, this distinction is not always crucial, so I prefer to use these terms interchangeably.

Pre-trained Model Approach

One common approach in transfer learning is to use a pre-trained model. Here’s how it works:

Step 1: Select Source Model

Choose a pre-trained model from available options. Many research institutions release models trained on large and challenging datasets, which can be used as the starting point.

Step 2: Reuse Model

The selected pre-trained model is then reused as the base for the new task. Depending on the specifics of the task, you might use the entire model or just parts of it.

Step 3: Tune Model

Finally, the model is fine-tuned on the new task’s data. This tuning process can involve adapting or refining the model based on the input-output pairs available for the new task.

When to Use Transfer Learning?

Transfer learning is particularly beneficial in the following scenarios:

- Limited Labeled Data: When there isn’t enough labeled training data to train a network from scratch.

- Similar Tasks: When there already exists a network pre-trained on a similar task, usually trained on massive amounts of data.

- Same Input: When the input for the new task is similar to the input for the pre-trained model.

In general, the benefits of transfer learning may not be obvious until after the model has been developed and evaluated. However, it often enables the development of skillful models that would be challenging to create without it.

Image Classification

Transfer learning is widely used in image classification tasks. Pre-trained models like VGG, ResNet, and EfficientNet, which are trained on large datasets like ImageNet, are fine-tuned for specific image classification tasks with smaller datasets.

Object Detection

In object detection, transfer learning helps improve detection accuracy by leveraging pre-trained models. Frameworks like Faster R-CNN and YOLO often use pre-trained backbones to enhance feature extraction.

Natural Language Processing (NLP)

Transfer learning is also prevalent in NLP. Pre-trained language models like BERT, GPT, and T5 are fine-tuned for various NLP tasks such as sentiment analysis, translation, and question answering.

Medical Image Analysis

In medical imaging, transfer learning is used to detect anomalies in MRI scans, CT scans, and X-rays. Pre-trained models are fine-tuned to identify specific medical conditions, aiding in diagnosis and treatment planning.